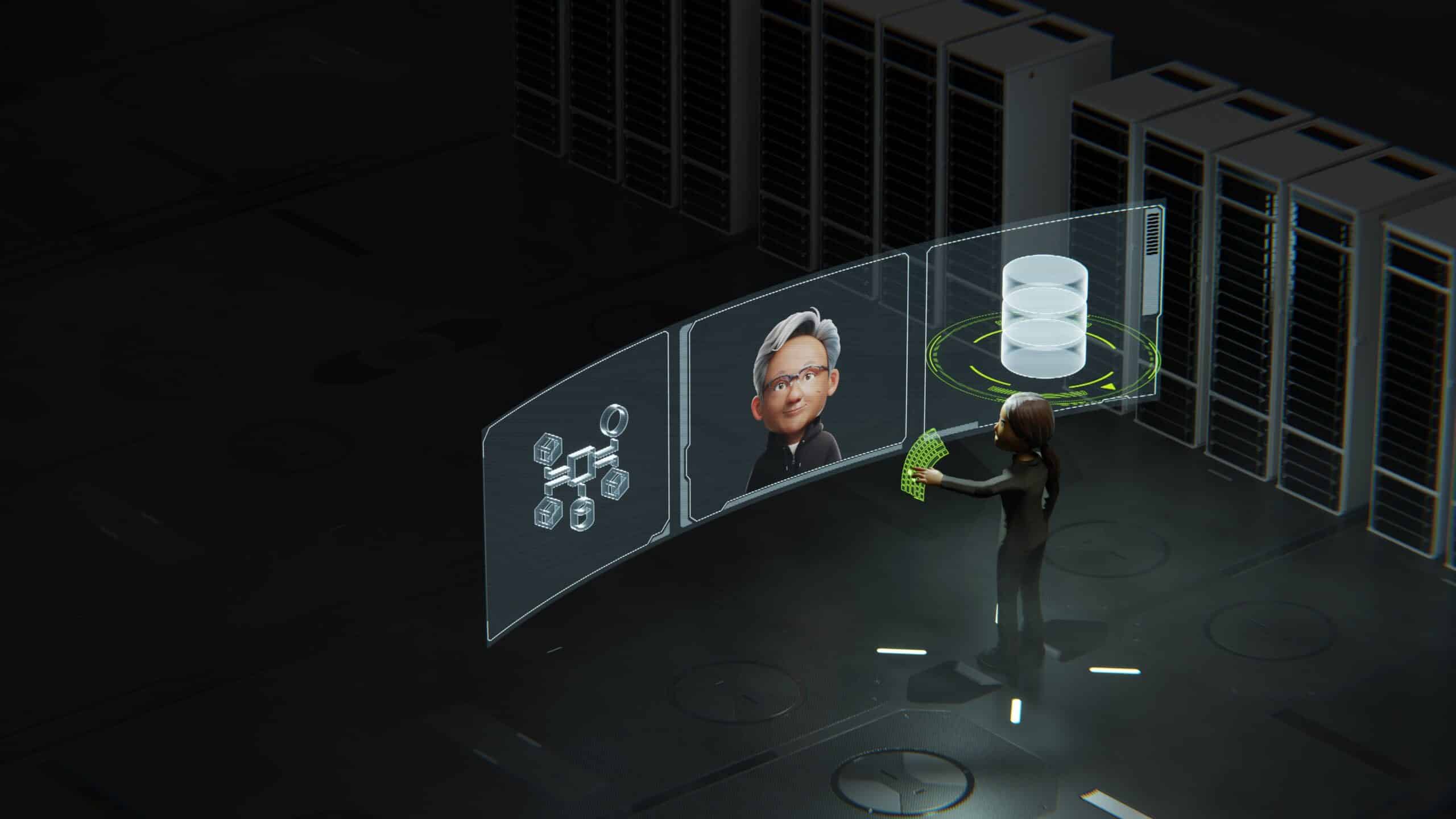

IBM and Nvidia have expanded their collaboration, announcing a set of integrations intended to speed up the deployment and scaling of enterprise AI. The companies made the announcement at Nvidia GTC 2025 in San Jose, California. The integrations target enterprise adoption of generative and agentic AI.

Reinforcing AI Infrastructure for the Enterprise

At the core of the partnership is the integration of Nvidia’s AI Data Platform into IBM’s hybrid cloud ecosystem. The combination is intended to simplify deploying AI workloads across cloud, data center, and on-premises environments, with an emphasis on open standards, data governance, and performance optimization to support AI operations at scale.

Tackling Unstructured Data with New Storage Innovations

To address the challenges that unstructured data poses for AI applications, IBM is adding content-aware storage capabilities to IBM Fusion, its hybrid cloud platform. The capability uses Nvidia BlueField-3 DPUs and Spectrum-X networking to speed up communication between GPUs and storage, which is intended to improve the performance of retrieval-augmented generation (RAG) applications and other compute-intensive workloads.

Accelerating AI Deployment with NeMo and NIM Microservices

The companies are using Nvidia NeMo Retriever microservices, built on Nvidia NIM, to support fast deployment of AI models across different environments. Packaging models as microservices is meant to make generative AI easier to deploy at scale, whether in the cloud, in the data center, or at the edge.

IBM’s watsonx Meets Nvidia’s NIM

IBM is also integrating its watsonx AI platform with Nvidia NIM microservices to give users access to a broad range of models. The integration is intended to simplify development and deployment while also supporting the monitoring and compliance needed for responsible AI adoption.

IBM Cloud will also introduce Nvidia H200 instances, aimed at AI workloads that need large memory capacity and high memory bandwidth, to support enterprise use of foundation models and other data-intensive AI applications.

Enterprise Support Services for Scalable AI

To help enterprises make use of this infrastructure, IBM Consulting is launching dedicated AI Integration Services. These services will focus on optimizing agentic and generative AI workloads across hybrid environments using Red Hat OpenShift and Nvidia technologies.

The collaboration was announced alongside other major Nvidia hardware and software news at GTC 2025. For example, Nvidia’s recent work on photonics for AI data centers reflects the company’s broader push to reshape enterprise AI infrastructure.

A Strategic Vision for the Future of AI

“IBM is focused on helping enterprises build and deploy effective AI models and scale with speed,” said Hillery Hunter, CTO and general manager of innovation at IBM Infrastructure. “Together, IBM and Nvidia are collaborating to create and offer the solutions, services, and technology to unlock, accelerate, and protect data—ultimately helping clients overcome AI’s hidden costs and technical hurdles to monetize AI and drive real business outcomes.”

For more on Nvidia’s latest AI partnerships, explore how General Motors is using Nvidia to power AI-driven vehicles and smart factories.